Performance optimization of web applications is a hot topic these days. One of the related areas is, of course, optimizing the application code itself. For a client-side application running in the web browser, this means speeding up the JavaScript code wherever possible. Because premature optimization is not a good practice, it is crucial to first locate which parts cause the slowdown and need improvement. Enter the logical first step of every optimization: initial performance analysis.

Sampling

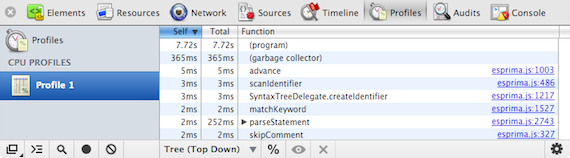

Are you familiar with a JavaScript profiler? Many modern browsers already include a very useful profiler for hunting down JavaScript performance problems. The workflow is like this. First, you press a button to start the full performance monitoring of your application. After the application has run for a while, you press another button to stop the monitoring. The outcome is a top-down breakdown like the one in the screenshot, which gives very useful information on where your application spends the most time.

Under the hood, activating the monitoring causes the JavaScript engine to kick off a sampling profiler. It is named that way because the profiler looks at the state of the virtual machine at a predefined interval, e.g. 1 ms in the case of V8. The important state information is collected and later used to construct the profiling view depicted in the above screenshot.

When profiling like this, be aware of the observer effect. The extra monitoring adds a certain overhead to the code execution; hence, the actual timing will differ from the case where there is no instrumentation at all. The difference is usually minimal, in particular since the entire application is affected. Still, take it into account before you draw any conclusions.
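In many browsers the start and stop buttons also have a programmatic counterpart. The following is a minimal sketch, assuming the non-standard console.profile and console.profileEnd functions are available (hence the guard); renderContacts and profiled are hypothetical names used only for illustration.

```javascript
// Hypothetical workload standing in for the part of the app to profile.
function renderContacts(count) {
  const rows = [];
  for (let i = 0; i < count; i++) {
    rows.push('<li>Contact ' + i + '</li>');
  }
  return rows.join('');
}

// Run fn between console.profile and console.profileEnd when both exist.
// They are non-standard, so fall back to a plain call when they are missing.
function profiled(label, fn) {
  const canProfile = typeof console.profile === 'function' &&
                     typeof console.profileEnd === 'function';
  if (canProfile) console.profile(label);
  try {
    return fn();
  } finally {
    if (canProfile) console.profileEnd(label);
  }
}

const html = profiled('renderContacts', function () {
  return renderContacts(1000);
});
```

Where the functions are supported, the recorded profile typically shows up in the developer tools under the given label. Elsewhere the wrapper simply runs the function.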

Tracing

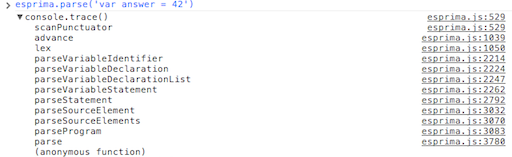

While the timing information obtained from the profiler is useful, sometimes you are interested not only in how fast a certain operation is carried out, but also in what happens during that time. This is like doing an X-ray of your program execution. Fortunately, most modern browsers support the console API, and console.trace will give you exactly that information by printing the call stack at the point where it is invoked.
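As a small illustration, console.trace can be dropped into any function to reveal how execution arrived there. The functions saveContact and onSubmit are made-up names, not part of any real application.

```javascript
// Hypothetical address book code; the names are invented for illustration.
function saveContact(contact) {
  // Prints the message followed by the current call stack
  // (developer console in browsers, stderr in Node.js).
  console.trace('saveContact called for ' + contact.name);
  return true;
}

function onSubmit(form) {
  return saveContact({ name: form.name });
}

const saved = onSubmit({ name: 'Ada' });
```

The printed stack lists saveContact first and onSubmit below it, which shows the path that led to the call.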

Another way to gather the traces is by instrumenting the JavaScript source. This is a technique I’ve used to find out the exact execution sequence when an application starts. For example, in that experiment I found out that a simple jQuery Mobile site makes over 4000 function calls. Note that the number of calls by itself does not tell you much. However, tracking it over time, or even for every check-in, can be really helpful. For example, if someone suddenly commits a bug fix which brings the number of function calls to 8000, this should raise a red flag. That can be one layer of your multilayer defense.
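Counting calls does not always require rewriting the source. The sketch below is not the source-level instrumentation described above, but a simpler runtime wrapper that conveys the same idea; countCalls and the app object are hypothetical and exist only for this example.

```javascript
// Wrap every function-valued property of `target` so each call is counted.
// Returns the live counter object, keyed by method name.
function countCalls(target) {
  const counts = {};
  Object.keys(target).forEach(function (name) {
    const original = target[name];
    if (typeof original !== 'function') return;
    counts[name] = 0;
    target[name] = function () {
      counts[name] += 1;
      return original.apply(this, arguments);
    };
  });
  return counts;
}

// Hypothetical application object used only for this illustration.
const app = {
  title: 'Address Book',
  init: function () { this.load(); this.render(); },
  load: function () { return []; },
  render: function () { return '<ul></ul>'; }
};

const counts = countCalls(app);
app.init();

// Sum the per-method counters into one number that can be tracked over time.
const total = Object.keys(counts).reduce(function (sum, name) {
  return sum + counts[name];
}, 0);
console.log(counts, 'total calls:', total);
```

Logging the total on every build gives a number to watch for sudden jumps. This wrapper only sees methods that are own properties of the wrapped object, so it is far less thorough than instrumenting the source.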

Scalability is another excellent area for application tracing. If you have an address book application, sorting the contacts alphabetically can be really fast if you only have 10 entries. However, here you are not only interested in the absolute time of the sorting. You also want to know how it handles an address book with 100 entries, 1000 entries, and so on.

Formal analysis of the complexity can be complicated or prohibitively expensive. This is where empirical run-time analysis kicks in. For example, you can instrument a swap helper such as Array#swap and plot the number of function calls against the number of address book entries. If your team member implemented the sorting using bubble sort instead of something faster like quicksort or merge sort, that chart will reveal it.
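To make the idea concrete, here is a minimal sketch that counts swap calls for growing inputs. It assumes a standalone swap function rather than a method on Array, and it feeds bubble sort its worst case (entries in descending order); all names are hypothetical.

```javascript
let swapCount = 0;

// Hypothetical swap helper, instrumented to count its own invocations.
function swap(list, i, j) {
  swapCount += 1;
  const tmp = list[i];
  list[i] = list[j];
  list[j] = tmp;
}

// Plain bubble sort, used here as the deliberately slow implementation.
function bubbleSort(list) {
  for (let end = list.length - 1; end > 0; end--) {
    for (let i = 0; i < end; i++) {
      if (list[i] > list[i + 1]) swap(list, i, i + 1);
    }
  }
  return list;
}

// Count the swaps needed to sort n entries given in descending order.
function swapsFor(n) {
  const entries = [];
  for (let i = n; i > 0; i--) entries.push(i);
  swapCount = 0;
  bubbleSort(entries);
  return swapCount;
}

const series = [10, 100, 1000].map(function (n) {
  return { entries: n, swaps: swapsFor(n) };
});
console.log(series);
```

For this input the number of swaps is n(n-1)/2, i.e. 45, 4950, and 499500. Ten times more entries costs roughly a hundred times more swaps, which is the quadratic growth the chart would expose.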

Timing

After you locate the performance hot spot, the next step is obviously to fix it. In many cases, speeding up some parts of the application is not really difficult. In other cases, you have to try different strategies and see which one meets the performance criteria. Often, it is as simple as figuring out which implementation is the fastest. This is where timing the execution of a function is useful.

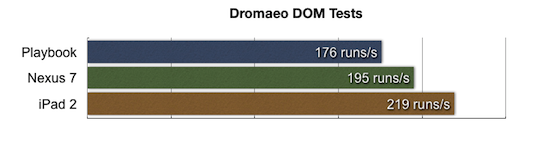

Accurate timing is far from trivial, and it has been covered in many articles, e.g. Bulletproof JavaScript benchmarks. Unless you have a lot of time to learn statistics and uncover cross-browser secrets, it is easier to use a ready-made benchmark library such as Benchmark.js. For a quick comparison, using the popular jsPerf (which uses Benchmark.js under the hood) is highly recommended. You also get the chance to try it on different browsers and devices, just to ensure that your strategy is not biased towards a particular implementation.

Addendum: Pay attention to timing accuracy and precision! You need to measure the right thing (accurate) and do so with a high degree of repeatability (precise).
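A rough way to get a feeling for precision is to take several timing samples and look at their spread. The sketch below is a naive illustration under simple assumptions (no warm-up handling, no outlier rejection) and not a substitute for a proper benchmark library; sample, mean, and standardDeviation are hypothetical helper names.

```javascript
// Use the high-resolution clock when available, else fall back to Date.now.
const now = (typeof performance !== 'undefined' &&
             typeof performance.now === 'function')
  ? function () { return performance.now(); }
  : function () { return Date.now(); };

// Collect `samples` measurements; each one runs fn `iterations` times
// and records the average time per call in milliseconds.
function sample(fn, samples, iterations) {
  const times = [];
  for (let s = 0; s < samples; s++) {
    const start = now();
    for (let i = 0; i < iterations; i++) fn();
    times.push((now() - start) / iterations);
  }
  return times;
}

function mean(values) {
  let sum = 0;
  for (let i = 0; i < values.length; i++) sum += values[i];
  return sum / values.length;
}

// Sample standard deviation (divides by n - 1).
function standardDeviation(values) {
  const avg = mean(values);
  let squares = 0;
  for (let i = 0; i < values.length; i++) {
    squares += (values[i] - avg) * (values[i] - avg);
  }
  return Math.sqrt(squares / (values.length - 1));
}

// Made-up workload: sort a fresh copy of a small array each time.
const data = [5, 3, 8, 1, 9, 2, 7, 4, 6, 0];
const times = sample(function () {
  return data.slice().sort(function (a, b) { return a - b; });
}, 20, 1000);

console.log('mean (ms):', mean(times), 'std dev (ms):', standardDeviation(times));
```

A standard deviation that is large relative to the mean suggests the measurement is not yet repeatable enough to support a conclusion.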

Besides careful timing, it is also important to pay attention to the benchmark corpus. Whenever possible, choose something which resembles real-world usage. For example, if you try different ways to sort the contacts in the address book application, make sure you supply a representative list for the benchmarks, more than just a useless array of ['a', 'b', 'c'].
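When real data is not at hand, a generated corpus is still better than a toy array. The following sketch builds a repeatable list of contact names from a seeded pseudo-random generator; the name lists are invented for this example, and a truly representative corpus would come from (anonymized) real data.

```javascript
// Small deterministic pseudo-random generator (a linear congruential
// generator), so that every benchmark run sees exactly the same corpus.
function createRandom(seed) {
  let state = seed >>> 0;
  return function () {
    state = (Math.imul(state, 1664525) + 1013904223) >>> 0;
    return state / 4294967296; // value in [0, 1)
  };
}

// Made-up sample names with mixed case, accents, and punctuation.
const FIRST = ['Ada', 'Émile', 'Grace', 'linus', 'Mei', 'Søren', 'Zoë'];
const LAST = ['Lovelace', 'van Dijk', "O'Neil", 'Nguyen', 'Åberg', 'smith'];

// Build `count` contact names by picking a first and last name at random.
function makeContacts(count, seed) {
  const random = createRandom(seed);
  const contacts = [];
  for (let i = 0; i < count; i++) {
    const first = FIRST[Math.floor(random() * FIRST.length)];
    const last = LAST[Math.floor(random() * LAST.length)];
    contacts.push(first + ' ' + last);
  }
  return contacts;
}

const corpus = makeContacts(1000, 42);
```

Using the same seed for every candidate implementation keeps the comparison fair, while changing the size argument covers the 10, 100, and 1000 entry cases.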

Last but not least, remember that optimization is not a destination; it is a journey!