It’s lunch time. You enter your favorite cafe and place an order for your beloved sandwich. Nobody else is there so your tasty meal is ready in some minutes. The next day, the same situation. This time, there are few other customers in front of you. You wonder if your waiting time (for the sandwich to arrive) remains the same or if it increases linearly or even quadratically. In other words, you are curious about the scalability of the sandwich preparation process.

This is the topic I’ve touched before. In complex client-side and server-side JavaScript-heavy application, the absolute running time of a critical procedure is important but that is not the only important thing. The performance of the system also depends on the ability of that critical procedure to scale as the input data grows.

Another real-world example here is given for Esprima, the ECMAScript parser I’m deeply involved with. From the very beginning, the performance of the parser is a major concern. The running-time of the benchmarks suite is something that we watch closely. The corpus of the benchmarks suite itself is a collection of popular libraries, from Underscore to jQuery Mobile.

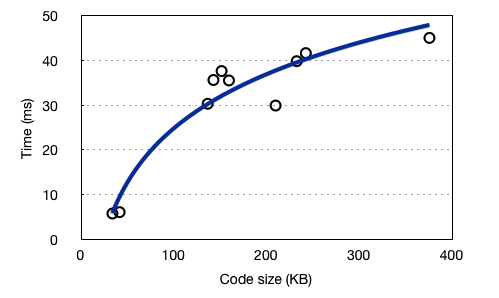

A formal analysis of the time complexity of the parsing code will be beyond my limited math skills. In addition, it could be extremely time consuming and not worth the effort. A practical alternative is doing a representative empirical analysis. For Esprima’s benchmarks suite, plotting the parsing time as a function of the code size gives the following chart:

Due its recursive descent approach, it should be possible to craft a special source which abuses Esprima parser by carefully exhausting the call stack. However, for parsing real-world code, as demonstrated in the benchmark corpus, we can be quite content that there is a comfortable upper bound as to when it is going to finish. Even though Esprima is provided “as is” and without any warranties, somehow its run-time scalable behavior makes me sleep better at night!