Complicated code is difficult to digest and hard to maintain. The best way to avoid it is to not have it in the first place. Fortunately, for web applications written in JavaScript, we have enough tooling to detect complex code and keep it out of the source repository.

Analyzing code complexity is rather easy these days with a project like JSComplexity. Even better, the complexity metrics can be visualized in a good-looking, interactive report showing different metrics, from McCabe's cyclomatic complexity to the Halstead complexity measures. These tools are easy to set up and run quite well on the command line (using Node.js).
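To make the cyclomatic metric concrete, here is a small sketch. The function classify is hypothetical and exists only for illustration. Under the basic McCabe count (decision points plus one), it has a cyclomatic complexity of 4. Individual tools may count slightly differently, for example by also counting logical operators.

```javascript
// Hypothetical example: three decision points (one loop, two ifs),
// so the basic McCabe cyclomatic complexity is 3 + 1 = 4.
function classify(values) {
    var result = { negative: 0, zero: 0, positive: 0 };
    for (var i = 0; i < values.length; i++) {   // decision point 1
        if (values[i] < 0) {                     // decision point 2
            result.negative++;
        } else if (values[i] === 0) {            // decision point 3
            result.zero++;
        } else {
            result.positive++;
        }
    }
    return result;
}

console.log(classify([-2, 0, 5, 7]));
```

Every extra branch or loop adds one more path that a reader has to keep in mind, which is why a threshold on this number works as a rough readability guard.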

A preventive way to enforce a complexity threshold on new code is to compare its complexity against a limit. With JSComplexity, this can be done via its --maxcc argument (there are other useful options as well; refer to its README for more details). If the package complexity-report is installed globally, the check is as easy as:

cr --maxcc 15 index.js

To include this in a multilayer defense workflow, simply run that check as part of, e.g., a Git pre-commit hook. If your project relies on Node.js for its tests (i.e. via npm test), it is also useful to integrate the check there. First, ensure that the complexity-report package is listed in the devDependencies section of your package.json, and then add a new entry to the scripts section, such as:

"complex": "node node_modules/complexity-report/src/cli.js --maxcc 15 index.js"

which permits running the check with npm run-script complex. Now it is a matter of inserting this step into the main test entry of the scripts section. If any function in index.js has a cyclomatic complexity greater than 15, the tool will complain and therefore cause the entire test to fail.
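For the Git pre-commit hook mentioned earlier, one possible approach is a small Node.js script that runs the check and passes its exit status on. The following is only a sketch: the helper name runCheck is made up for illustration, and it assumes the cr command is available on the PATH (e.g. installed globally, as above).

```javascript
var spawnSync = require('child_process').spawnSync;

// Hypothetical helper: run a command, forward its output to the terminal,
// and return its exit status (1 if the command could not be started).
function runCheck(command, args) {
    var result = spawnSync(command, args, { stdio: 'inherit' });
    if (result.error || result.status === null) {
        return 1;
    }
    return result.status;
}
```

In a hook file such as .git/hooks/pre-commit (made executable, with a Node.js shebang line), the script would end with something like `process.exit(runCheck('cr', ['--maxcc', '15', 'index.js']));`. Git aborts the commit whenever the hook exits with a non-zero status.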

While this already serves as a good complexity filter, we can take it to the next level. For a start, it would be better to have a clear picture of the most complex functions. That way, it is easier to spot the worst offenders and fix them first. Again, this requires only a simple script, as illustrated by Esprima’s tools/list-complexity.js:

var cr = require('complexity-report'),
    content = require('fs').readFileSync('index.js', 'utf-8'),
    list = [];

// Collect the cyclomatic complexity of every function in the file.
cr.run(content).functions.forEach(function (entry) {
    list.push({ name: entry.name, value: entry.complexity.cyclomatic });
});

// Sort in descending order, most complex first.
list.sort(function (x, y) {
    return y.value - x.value;
});

console.log('Most cyclomatic-complex functions:');
// Print the six most complex functions.
list.slice(0, 6).forEach(function (entry) {
    console.log(' ', entry.name, entry.value);
});

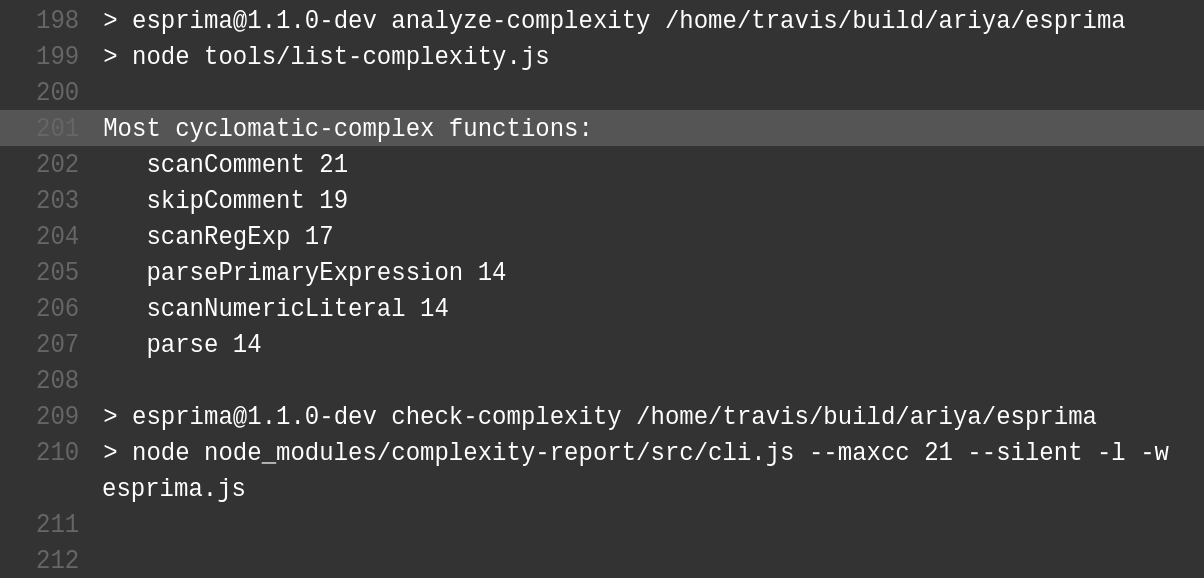

Running the above script will list 6 functions which have the highest cyclomatic complexity. An example output is illustrated in the following screenshot, taken verbatim from Travis CI build report of Esprima.

Because Travis CI can run the tests on every GitHub pull request, such a report becomes very valuable during code review. If someone introduces a complex piece of code, it will be blatantly obvious. This preemptive defensive layer prevents the unintentional merging of unreadable handiwork.
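The listing and the threshold can also be combined, so that the build report names exactly the functions that exceed the limit. The sketch below assumes a report object with a functions array whose entries have name and complexity.cyclomatic, as in the listing script. The helper findOffenders and the sample data are made up for illustration.

```javascript
// Hypothetical helper: return the functions whose cyclomatic complexity
// exceeds maxcc, sorted with the most complex first.
function findOffenders(report, maxcc) {
    return report.functions
        .filter(function (entry) {
            return entry.complexity.cyclomatic > maxcc;
        })
        .map(function (entry) {
            return { name: entry.name, value: entry.complexity.cyclomatic };
        })
        .sort(function (x, y) {
            return y.value - x.value;
        });
}

// Made-up report data, not taken from any real project.
var sampleReport = {
    functions: [
        { name: 'foo', complexity: { cyclomatic: 16 } },
        { name: 'bar', complexity: { cyclomatic: 9 } },
        { name: 'baz', complexity: { cyclomatic: 21 } }
    ]
};

var offenders = findOffenders(sampleReport, 15);
offenders.forEach(function (entry) {
    console.log('Too complex:', entry.name, entry.value);
});

// A real check script would pass this value to process.exit().
var exitCode = offenders.length > 0 ? 1 : 0;
```

In a real script, the report would come from cr.run(content) instead of the sample object, and a non-zero exit code would make the build fail.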

Complexity analysis costs almost nothing. Why not always do it where it makes sense?