While a build system is always critical to the success of a software project, maintaining such a system is not always fun. Hence, we tend to investigate many different ways to reduce the maintenance effort. Thanks to Docker, the build agent itself can be kept very simple, because it does nothing but spin up and run a Docker container.

Imagine you are a Python shop and suddenly you have an engineer trying to experiment with Go for the new REST API server. It is certainly possible to retrofit your build infrastructure to include Go development tools and dependencies. But what if another environment and other frameworks are also needed? It is not scalable (process-wise) to keep bugging your build/release engineers with these (continuous) requirements.

In a configuration that involves a server-agent setup (or in Jenkins lingo, master-slave), the agent is the one that does the backbreaking work. In the previous blog post, Build Agent: Template vs Provisioning, I already outlined the most common techniques to eliminate the need to babysit a build agent. I myself am a big fan of the automatic provisioning approach. As Martin Fowler wrote about the Phoenix Server:

A server should be like a phoenix, regularly rising from the ashes.

When a build agent misbehaves due to configuration drift, we do not bother to troubleshoot it. We simply terminate that troublesome phoenix and let it regenerate (thanks to the provisioning mechanism). For a more philosophical take on this approach, see also my other blog post, A Maturity Model for Build Automation.

The Container is the Phoenix

If many of your build agents share the same traits, e.g. they are mostly Linux systems (often in virtualized form, e.g. an EC2 instance) with an assortment of tools (compilers, libraries, frameworks, test systems), then the scenario can be further simplified. What if the build agent is not the actual phoenix? What if the build agent is only the realm where the phoenix lives (and dies)?

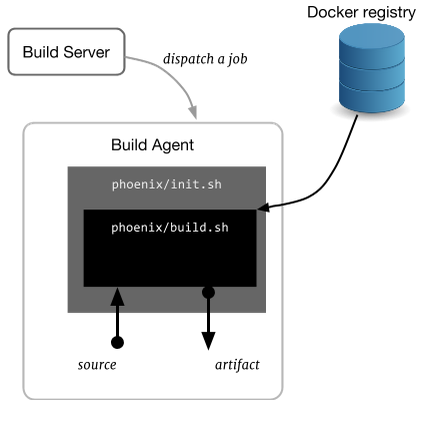

In this situation, a Docker container becomes the real phoenix. Every project needs to supply some additional information for the build agent (imperative: in the form of a script; declarative: a common configuration understood by the build tool): which container to use and how to initiate the in-container build.

Let’s take a simple project and set up a build using this Docker and Phoenix approach. For this example, we will build a CPU feature detection tool (implemented in C++). If you want to follow along, simply clone its git repository gitlab.com/ariya/cpu-detect and pay attention to the phoenix subdirectory.

There are two shell scripts inside the phoenix subdirectory: init.sh and build.sh.

The first one, init.sh, is the one to be executed by the build agent. It pulls the container used to execute the actual build step. Since this is a C++ project, we will leverage the gcc container. After that, it runs the container with a volume mapping so that /source inside the container is mapped to the git checkout directory. When the container is launched, it also executes the other script, build.sh (referred to as /source/phoenix/build.sh since we are now inside the container).

If we simplify it, the whole content of init.sh can be summarized as:

    docker run -v "$SOURCE_PATH":/source gcc:4.9 sh -c "/source/phoenix/build.sh"
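The two steps described above (pull, then run with the volume mapping) can be sketched as a small function. This is only an illustration, not the actual init.sh from the repository: the function name `run_in_container` and the `DOCKER` override variable are assumptions made here so the command can be swapped out for a dry run.

```shell
#!/bin/sh
# Sketch of the agent-side bootstrap (hypothetical helper, not the real init.sh).

# run_in_container IMAGE SOURCE_PATH
# Pulls IMAGE, then runs the in-container build with SOURCE_PATH mounted at /source.
run_in_container() {
    image=$1
    source_path=$2
    # DOCKER is an assumed override hook; it defaults to the real docker client.
    docker_bin=${DOCKER:-docker}

    # Fetch the image first so the run step starts from a warm cache.
    "$docker_bin" pull "$image" || return 1

    # Map the checkout to /source and hand control to the in-container script.
    "$docker_bin" run -v "$source_path:/source" "$image" \
        sh -c "/source/phoenix/build.sh"
}

# Example invocation on the build agent:
#   run_in_container gcc:4.9 "$SOURCE_PATH"
```

Setting `DOCKER=echo` before calling the function prints the two commands instead of executing them, which is handy for checking the arguments on a machine without Docker.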

The second script, build.sh, is not executed by the build agent directly. It will run inside the specified container, as described above. The main part of build.sh is to run the actual build step. For this project, it only needs to invoke make (in a real-world project, a battery of tests must be part of this). Before that, the script needs to prepare a build directory and copy the original source (remember, /source inside the container corresponds to the git checkout). Once the build is completed, the build artifact has to be transferred back. In this case, we just copy the generated cpu-detect executable.
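The sequence just described (prepare a build directory, copy the source, build, copy the artifact back) might look like the following. It is a minimal sketch and not the repository's build.sh; the function name `run_build`, its argument order, and the optional build command argument are assumptions introduced for illustration.

```shell
#!/bin/sh
# Sketch of an in-container build script (hypothetical, not the real build.sh).

# run_build SOURCE_DIR BUILD_DIR ARTIFACT [BUILD_COMMAND]
run_build() {
    source_dir=$1
    build_dir=$2
    artifact=$3
    build_cmd=${4:-make}   # defaults to make, as in the example project

    # Refuse to continue without a build directory, since it is removed below.
    [ -n "$build_dir" ] || return 1

    # Start from a clean build directory so nothing leaks in from an earlier run.
    rm -rf "$build_dir" || return 1
    mkdir -p "$build_dir" || return 1

    # Copy the checkout so the build does not write into the mounted volume.
    cp -R "$source_dir/." "$build_dir/" || return 1

    # Run the actual build step inside the copy.
    ( cd "$build_dir" && sh -c "$build_cmd" ) || return 1

    # Transfer the artifact back to the checkout, where the agent can collect it.
    cp "$build_dir/$artifact" "$source_dir/$artifact" || return 1
}

# Inside the container this could be invoked as, for example:
#   run_build /source /build cpu-detect
```

Passing the build command as an argument keeps the sketch reusable for projects that do not use make.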

If any step during this process fails, including make itself, then the whole process will be marked as a failure. This automatic propagation of status eliminates the need for custom error handling.

To test this setup, have a box with Docker ready to use and then launch phoenix/init.sh. If everything works correctly, you will see output like that in the following screenshot.

If you experience an Inception moment trying to follow the steps, please use the following diagram. It is also a useful exercise to adapt those two phoenix scripts to your own personal project.

Agent of Democracy

In the above example, we pull and run a ready-to-use gcc container. In practice, you may want to come up with a set of customized containers to suit your needs. Hence, it is highly recommended that you set up your own Docker registry to be used internally. This becomes a private registry, and it should not be accessible by anyone outside your organization. Here is how your init.sh might look when incorporating this technique:

    REGISTRY="docker.mycompany.com"
    IMAGE="golang"
    TAG="1.4"
    CONTAINER="${REGISTRY}/${IMAGE}:${TAG}"
    echo "Container to be used: $CONTAINER."
    docker pull "$CONTAINER"
    echo

Now that the build process only happens inside the container, you can trim down the build agent. For example, it does not need to have packages for every development environment, from Perl to Haskell. All it needs is Docker (and of course the client software to run as a build agent), which massively reduces the provisioning and maintenance effort.

Let’s go back to the illustrative use case mentioned earlier. If an engineer on your team is inspired to evaluate Go, you do not need to modify your build infrastructure. Just ask them to provide a suitable Go development container (or reuse an existing one such as google/golang) and prepare those phoenix-like bootstrapper scripts. The same goes for the new intern who prefers to tinker with Rust instead. No change in the build agent is necessary! Everyone, regardless of the project requirements, can utilize the same infrastructure.

In fact, if you think through this carefully, you will realize that all those Linux build agents are not unique at all. They all have the same installed packages and no agent is better or worse than the others. There is no second-class citizen. This is democracy at its best.

Parametrization and Resilience

Knowing the build number and other related build information is often essential to the build process. Fortunately, many continuous integration systems (Bamboo, TeamCity, Jenkins, etc.) can pass that information via environment variables. This is quite powerful since all we need to do is pass it on to Docker. For example, if you use Bamboo, then the invocation of docker needs to be modified to look like this (notice the use of the -e option to denote an environment variable):

    docker run -v "$SOURCE_PATH":/source \
        -e bamboo_buildNumber="${bamboo_buildNumber}" \
        "$CONTAINER" sh -c "/source/phoenix/build.sh"
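When more than one variable needs to be forwarded, listing each by hand gets tedious. The sketch below generates the `-e` options for every environment variable sharing a prefix; it relies on `docker run -e NAME` (with no value) taking the value from the calling environment. The function name `env_flags` is an assumption made for this example.

```shell
#!/bin/sh
# Sketch: build "-e NAME" options for all variables with a given prefix
# (hypothetical helper).

# env_flags PREFIX: print one "-e NAME" line per matching environment variable,
# sorted by name so the output is stable.
env_flags() {
    awk -v prefix="$1" 'BEGIN {
        for (name in ENVIRON)
            if (index(name, prefix) == 1)
                print "-e " name
    }' | sort
}

# Example invocation (left unquoted on purpose so each option becomes its own word):
#   docker run -v "$SOURCE_PATH":/source $(env_flags bamboo_) \
#       "$CONTAINER" sh -c "/source/phoenix/build.sh"
```

Only variable names are emitted, never values, so values containing spaces do not interfere with word splitting.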

Another side effect of this Docker-based build is the built-in error recovery. In many cases, a build may fail or it gets stuck in some process. Ideally, you want to terminate the build in this situation since it warrants a more thorough investigation. Armed with the useful Unix timeout command, we just need to modify our Docker invocation:

    TIMEOUT=2m
    echo "Triggering the build (with ${TIMEOUT} timeout)..."
    timeout --signal=SIGKILL ${TIMEOUT} \
        docker run -v "$SOURCE_PATH":/source \
        "$CONTAINER" sh -c "/source/phoenix/build.sh"

By the way, this is the reason why there is an explicit docker pull in init.sh. Technically it’s not needed, but we use it as a mechanism to warm up the container cache. This way, the time it takes to initially pull the container will not be included in that 2-minute timeout.

With the use of timeout, if the Docker process does not complete in 2 minutes, it will be terminated with SIGKILL, effectively aborting the whole step at once. Since the offending application is isolated inside a container, this kind of clean-up also results in a really clean termination. There is no more server hanging around doing nothing because it was not killed properly. There is no stray zombie process eating up resources in the background.
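For reporting purposes, it can help to tell a timed-out build apart from an ordinary failure. With GNU timeout, a run that hits the limit normally exits with status 124, and when the KILL signal is used the status is 137 (128 + 9). The sketch below maps those codes to a label; the function name `describe_build_status` is made up here, and note that 137 can also mean the process was killed by something other than the timeout.

```shell
#!/bin/sh
# Sketch: classify the exit status of a timeout-wrapped build (hypothetical helper).

# describe_build_status STATUS: print "success", "timeout", or "failure".
describe_build_status() {
    case "$1" in
        0)       echo "success" ;;
        124|137) echo "timeout" ;;   # 124: timed out, 137: ended by SIGKILL
        *)       echo "failure" ;;
    esac
}

# Example usage right after the timeout-wrapped docker invocation:
#   timeout --signal=SIGKILL "$TIMEOUT" docker run ...
#   describe_build_status $?
```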

Summary: Use Docker to modify the build agent to be a realm where your phoenix lives and dies. After that, turn every build process into a short-lived phoenix.