Even though modern computing hardware gets faster by the day, software performance is increasingly treated as a feature. Optimization work, whether for mobile applications or for high-volume web sites, generates plenty of jobs in the industry. Squeezing out the last drops of performance has become a hot trend.

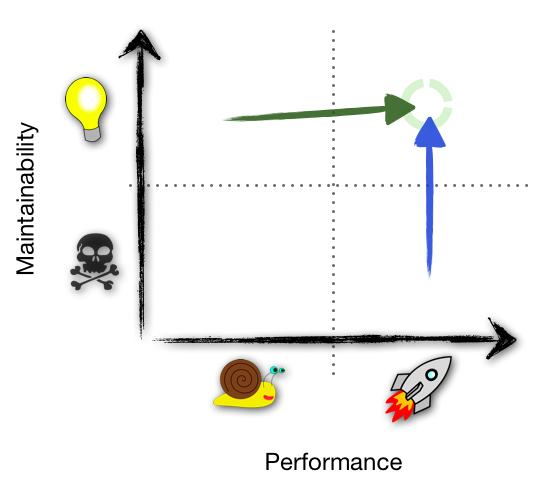

What I have learned from several large-scale software projects is that optimization should be treated as part of the journey. It is not a goal per se: your destination is not merely to reach the intended performance criteria. If we draw the quadrants of maintainable vs. optimized code, we may end up with the following diagram. Note that this dichotomy does not always apply in the real world; for our purpose, however, it does illustrate the point.

In the beginning…

Obviously, the dream of every software team is to produce very readable code which also performs magically well. This is not always achievable, in particular because not every engineer is a rock star. High-performance code that is also easy to follow is the ideal case, and if you can hit that on the first attempt, keep up the good work.

Another scenario is where you start from a clean implementation of a concept. This version follows the design faithfully and resembles the vision of the product. Since it comes pretty much fresh from the specification, the code is optimized for conformance and not necessarily for speed. At this point, the fun with the optimization begins. Steps are taken to ensure that the performance improves along the way. This is the journey denoted by the green arrow in the above diagram.

An equally challenging starting point is when there is already a fantastic piece of code which runs extremely well. However, that code is badly written, hard to digest, poorly commented, or simply unmaintainable (or worse, a combination thereof). This often happens when the organization adopts a foreign library, e.g. through an acquisition. The usual clean-up kicks in and the code is refactored so that it adheres to the new hygiene standards, as depicted by the blue arrow in the illustration.

The final possibility is when you have to deal with the worst state: incomprehensible and dog-slow. In this case, either rewrite everything from scratch or do a massive review so that it reaches some sensible state before even trying to optimize anything.

Incremental is the new black

For the two middle starting points (clean but slow, and fast but messy), performance improvement is very often designed with some specific goals in mind. It can’t be a vague objective; it needs to be reproducible and measurable. The typical strategy is to create a performance test suite which acts as the benchmark baseline. Every attempt to speed up some aspect of the application must ensure that the benchmark score increases. Thus, it’s equally important to have representative tests in that benchmark suite, something that resembles real-world usage by the customer and not just some synthetic setup.
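As a rough illustration of a reproducible baseline, here is a minimal sketch in JavaScript. The names `medianTime` and `isRegression` and the `tolerance` parameter are hypothetical, not taken from any particular benchmark tool. The sketch also measures elapsed time (lower is better) rather than a score, so the comparison is inverted relative to a score-based suite.

```javascript
// Pick a timer: performance.now() where available, Date.now() otherwise.
var now = (typeof performance !== 'undefined' && performance.now)
  ? function () { return performance.now(); }
  : function () { return Date.now(); };

// Run `fn` `runs` times and return the median duration in milliseconds.
// The median is less sensitive to a single noisy run than the mean.
function medianTime(fn, runs) {
  var samples = [], i, start;
  for (i = 0; i < runs; ++i) {
    start = now();
    fn();
    samples.push(now() - start);
  }
  samples.sort(function (a, b) { return a - b; });
  return samples[Math.floor(samples.length / 2)];
}

// Compare a candidate timing against a recorded baseline (both in ms).
// `tolerance` is an assumed allowance for measurement noise, e.g. 0.05 = 5%.
function isRegression(baselineMs, candidateMs, tolerance) {
  return candidateMs > baselineMs * (1 + tolerance);
}
```

In practice, `fn` would exercise a workload recorded from real usage, and the baseline number would be stored alongside the commit that produced it.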

Generally, it’s good practice to have a series of small commits rather than one huge change that touches everything. For performance improvement, this gets even more important. Many optimization tricks are about compromise. Over time, the compromise changes, and therefore it is imperative to revisit all the hacks which were introduced to satisfy the now-obsolete compromise. This is possible only if every single tweak is isolated and easily accounted for. Undoing and redoing a big commit which may or may not impact different parts of the application is a risky move. Not only that, but the technology and the environment very often improve with time. For example, JavaScript developers used to rely on the trick of caching the array length:

    var i, len;

    for (i = 0, len = contacts.length; i < len; ++i) {
        // do something
    }

These days, JavaScript engines are smart enough to understand this case and the non-cached version will perform equally well.
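For comparison, here is a small sketch of both forms side by side. The function names `visitCached` and `visitPlain` are hypothetical, introduced only for illustration. The two behave identically as long as the loop body does not change the length of the array.

```javascript
// Cached-length form, as in the older idiom.
function visitCached(contacts, visit) {
  var i, len;
  for (i = 0, len = contacts.length; i < len; ++i) {
    visit(contacts[i]);
  }
}

// Plain form: reads contacts.length on every iteration.
function visitPlain(contacts, visit) {
  var i;
  for (i = 0; i < contacts.length; ++i) {
    visit(contacts[i]);
  }
}
```

Whether the two really perform the same on a given engine is something to confirm with the benchmark suite rather than assume.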

If you start from the performant-but-unreadable quadrant, iterative clean-up will reveal how much performance you can sacrifice to gain future maintainability. Again, a trade-off must be set. For example, are you willing to accept a 5% penalty by changing this code fragment into something which is easier to review?

    void hsv_to_hsl(double h, double s, double v, double *hh, double *ss, double *ll)
    {
        *hh = h;
        *ll = (2 - s) * v;
        *ss = s * v;
        *ss /= (*ll <= 1) ? (*ll) : 2 - (*ll);
        *ll /= 2;
    }

Over time, the readability scale can change as well. If everyone becomes really good at mastering the language, the above construct may become very natural, and therefore it is OK to keep this form and abandon the longer version.
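To make the trade-off concrete, here is one hypothetical "longer version" of the C routine `hsv_to_hsl` above. It is written in JavaScript to match the earlier loop example and is a sketch, not the original author's code. It assumes all components are in the range [0, 1]. The guard against a zero divisor is an addition of this sketch; the terse version divides by zero for pure black or white.

```javascript
// Hypothetical, more explicit counterpart of hsv_to_hsl.
// Inputs h, s, v and outputs h, s, l are assumed to lie in [0, 1].
function hsvToHsl(h, s, v) {
  // Lightness is L = V * (1 - S / 2), so twice the lightness is (2 - S) * V.
  var doubleLightness = (2 - s) * v;

  // HSL saturation is chroma / (1 - |2L - 1|), where chroma = S * V.
  var chroma = s * v;
  var divisor = doubleLightness <= 1 ? doubleLightness : 2 - doubleLightness;

  // Assumption of this sketch: report zero saturation when the divisor is 0
  // (pure black or pure white) instead of dividing by zero.
  var saturation = divisor === 0 ? 0 : chroma / divisor;

  return { h: h, s: saturation, l: doubleLightness / 2 };
}
```

Each intermediate value now has a name a reviewer can check against the color-space formulas, at the cost of a few extra lines and possibly a few cycles.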

Dropping a 500-line patch with a comment such as “Rewrite, 3x faster now” is not recommended by today’s standards of craftsmanship. Record the optimization steps in a logical series of check-ins which also mirrors your train of thought. Think of other people, and that also includes the future version of you!