This is the follow-up to the first part of my writing on memory efficient DOM implementation for KOffice. Now my intention is to find out whether real-time compression can help to further reduce the memory consumption.

XML compression is a whole field of research. There are a bunch of compressor available, from XMill, XMLZip, XMLPPM, XGrind, XML-Xpress, Exalt, TREECHOP and many others. Since I’m not a scholar in this field (I already have my own graduate research to take care of), I have chosen a direct and brutal approach: just compress the packed nodes representation and decompress them on-the-fly. While iteration is very common, simple cache buffer for decompressed neighbouring nodes is implemented so there is no need to always decompress every single node. This method easily fulfills the requirements that I set: no need to load the whole XML (because it intercepts SAX events), allows queries and iteration (due to the QDom-like wrapper), and can be conveniently tested with the compression switched off.

There are many algorithms suitable for fast compression and decompression. Byte-aligned Lempel-Ziv is a logical and obvious choice. Again, I have very much zero knowledge about data compression. There are Ross Williams’s LZRW1, Herman Vogt’s LZV, Markus Oberhumer’s LZO, Marc Lehmann’s LZF and many others. LZRW1 is well-known although probably covered by patents. LZV has the poorest compression ratio so it goes out of the list. LZO’s compression is the best, at the price of a bit more complicated state-machine, but my own implementation (since the reference implementation is GPL only) is not so fast and well debugged. LZF, the successor to LZV, is a good contender and already used (among others) in suspend2 project. On fairly modern machine with large CPU cache and reasonable branch predictor, liblzf (the reference implementation of LZF) is much slower than LZRW1. So what I did is to recreate the compressor. Armed with Valgrind’s Cachegrind, my new implementation is finally faster than LZRW1.

What I am really eager to try (but no time for that yet) is Wilson-Kaplan algorithm. At least the WK4x4 and WKdm variants have been implemented for Linux compressed caching project. And since the compression/decompression inside KoXmlReader is actually involving in-memory data, this fast and low-overhead WK algorithm should be a good candidate. There are assorted patent applications for such cache compression, but I wonder whether the algorithm per se is patented.

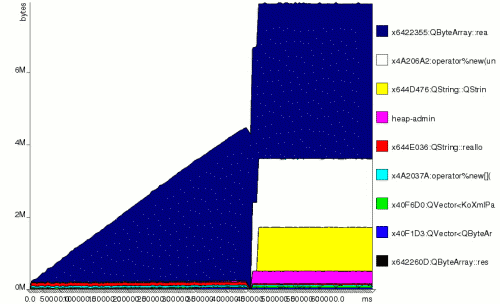

Now on to the result, starting with the worst test document: Frequencies.ods. Just to remind you, loading and iterating this document (19 MB XML) takes 250 MB if we’re using QDom but only 40 MB with KoXmlReader. Here what Valgrind/Massif reported when the compact form of the DOM is compressed and decompressed on the fly. At most, this barely consumes 8 MB. Compared to 250 MB, 40 MB is good enough, but 8 MB is great, isn’t it?

Interesting is, during the parsing phase, the heap allocation increases only (almost) linearly.

The full comparison with other documents is shown below. Amazing to see how short the violet bars are.

(Update: this chart is not available anymore due to a data loss)

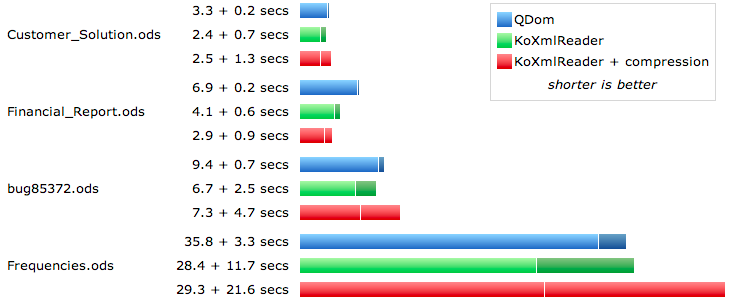

As for speed, regardless the lightning fast packing and unpacking, there’s some penalty. This graph below summarizes the timing required for parsing (light bars) and iterating (dark bars). Compare to what was shown in part 1, I only added the result for KoXmlReader with compression. Small documents are omitted because processing time is short anyway.

Of course, all these graphs must be taken with a grain of salt. But my objective is only to find out whether this all makes sense, not to write a full-brown research paper. So far I’m quite happy with the result.

It can be seen that KoXmlReader is a feasible alternative for QDom (in KOffice) as it does not incur any significant performance penalty yet the memory footprint in the worst case (Frequencies.ods) can only be one sixth (40 MB) compared to that of QDom (250 MB). We also see the additional benefit of using real-time compression. Compared to plain KoXmlReader, although in the worst case it is 1⁄5 slower (and there is still room for further improvements here), the allocated heap is only one fifth (8 MB vs 40 MB). Or even 1⁄30 if you bravely compare it against QDom (8 MB vs 250 MB).

I guess we should empirically find out how large the average documents that most people are working with. If these are less than the worst case that I tested, then enabling compression by default is a good thing because the performance penalty won’t be noticable. If not, even without compression it is already much more better than using QDom.

Other than that, my aim now is to fully integrate KoXmlReader in KOffice. Hopefully someday bug #59510 can really be closed.